# How Can We Make Robotics More Like Generative Modeling?

## Metadata

- Author: [[Eric Jang]]

- Full Title: How Can We Make Robotics More Like Generative Modeling?

- Category: #articles

- Document Tags: [[machine learning]]

- URL: https://evjang.com/2022/07/23/robotics-generative.html

## Highlights

- Diverse data can help your neural networks handle situations not seen at training time. In the broader context of machine learning, people call this “out of distribution generalization (OoD)”. In robotics we call it “operating in unstructured environments”. They literally mean the same thing. ([View Highlight](https://read.readwise.io/read/01h5ekkdqje5wdpvv4kpxn0cac))

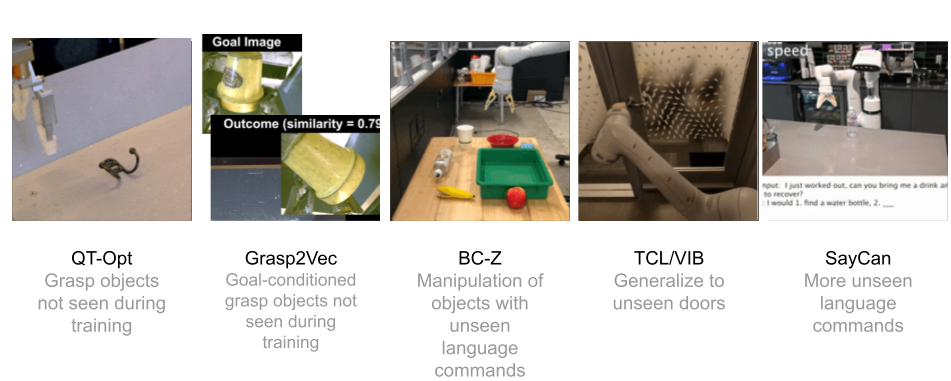

- We believe in the simplicity and elegance of deep learning methods, and evidence from the last decade has shown that the recipe works. Here are some examples of large-scale learning robots I’ve built while I was at Google Brain: ([View Highlight](https://read.readwise.io/read/01h5ekmmb4sezz1wvmk9yexzsy))

- What really matters is that once you have a large, diverse dataset, almost any mix of learning techniques (supervised, unsupervised, offline RL, model-based) should work. ([View Highlight](https://read.readwise.io/read/01h5eknbryhsbs4mxgy1b58ta3))

- Generative Modeling is not just about rendering pretty pictures or generating large amounts of text. It’s a framework with which we can understand *all* of probabilistic machine learning. There are just two core questions:

    1. How many bits are you modeling?

    2. How well can you model them? ([View Highlight](https://read.readwise.io/read/01h5ekr6jc91bb8yqx5h78k60e))

- Generally, modeling more bits requires more compute to capture those conditional probabilities, and that’s why we see models being scaled up. More bits also confer more bits of label supervision and more expressive outputs. ([View Highlight](https://read.readwise.io/read/01h5ektjz5c9gt0fjqc7b4gh96))
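A rough way to make the two questions concrete is to count bits directly. The sketch below is illustrative and not from the article: the function names, the 1000-class label, the 256×256 RGB image, and the four-outcome toy distribution are all assumptions chosen for the example. "How many bits" is taken to be the raw size of the target, and "how well" is taken to be the cross-entropy (in bits) between the true distribution and the model's.

```python
import math


def bits_in_label(num_classes: int) -> float:
    """Bits needed to pick one of `num_classes` equally likely labels."""
    return math.log2(num_classes)


def bits_in_image(height: int, width: int, channels: int = 3, bit_depth: int = 8) -> int:
    """Raw bits in an uncompressed image of the given shape."""
    return height * width * channels * bit_depth


def cross_entropy_bits(true_probs, model_probs) -> float:
    """Average bits the model spends per outcome drawn from `true_probs`."""
    return -sum(p * math.log2(q) for p, q in zip(true_probs, model_probs) if p > 0)


# Question 1: how many bits are you modeling?
label_bits = bits_in_label(1000)      # a hypothetical 1000-way class label
image_bits = bits_in_image(256, 256)  # a hypothetical 256x256 RGB image

# Question 2: how well can you model them?
true_dist = [0.5, 0.25, 0.125, 0.125]      # toy "true" distribution
uniform_model = [0.25, 0.25, 0.25, 0.25]   # a model that has learned nothing

perfect_bits = cross_entropy_bits(true_dist, true_dist)     # equals the entropy
naive_bits = cross_entropy_bits(true_dist, uniform_model)   # pays extra bits

print(f"label: {label_bits:.2f} bits, image: {image_bits} bits")
print(f"perfect model: {perfect_bits} bits/outcome, uniform model: {naive_bits} bits/outcome")
```

Under these assumptions the image target carries over a hundred thousand times more bits than the class label, which is one way to read the claim that richer outputs provide more supervision but need more compute to model well.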

- I asked DALL-E 2 to draw “a pack mule standing on top of a giant wave”, and this is how I think of generative modeling taking advantage of the Bitter Lesson. You have a huge wave of compute, you have a “workhorse” that is a large transformer or a modern ResNet, and at the very top you can choose whatever algorithm you like for modeling: VQ-VAE, diffusion, GAN, autoregressive, et cetera. The algorithmic details matter today, but they probably won’t in a few years once compute lifts all boats; scale and good architectures are what enable all that progress in the long term. ([View Highlight](https://read.readwise.io/read/01h5ekywgfesseysvewkz3y9kg))

## New highlights added July 16, 2023 at 8:46 AM

- Most people who are interested in contributing to robotics don’t necessarily *move* robots; they might train vision representations and architectures that might *eventually* be useful for a robot. Of course, the downside to decoupling is that improvements in perceptual benchmarks do not always map to improvements in robotic capability. For example, if you’re improving the mAP metric on semantic segmentation, video classification accuracy, or even a lossless compression benchmark (which in theory should contribute something eventually), you won’t know how improvements in representation objectives actually map to improvements in the downstream task. You have to eventually test on the end-to-end system to see where the real bottlenecks are. ([View Highlight](https://read.readwise.io/read/01h5fcser60vsdgb9zpahfzq9x))

- There’s a cool paper I like from Google called [“Challenging Common Assumptions in Unsupervised Learning of Disentangled Representations”](https://arxiv.org/abs/1811.12359), where they demonstrate that many completely unsupervised representation learning methods don’t confer significant performance improvements in downstream tasks, unless you are performing evaluation and model selection with the final downstream criteria you care about. ([View Highlight](https://read.readwise.io/read/01h5fcsxmkgb33nhje8nyseqz4))