# The Evolution of Chips

## Metadata

- Author: [[Anna-Sofia Lesiv]]

- Full Title: The Evolution of Chips

- Category: #articles

- URL: https://contrary.com/foundations-and-frontiers/evolution-of-chips

## Highlights

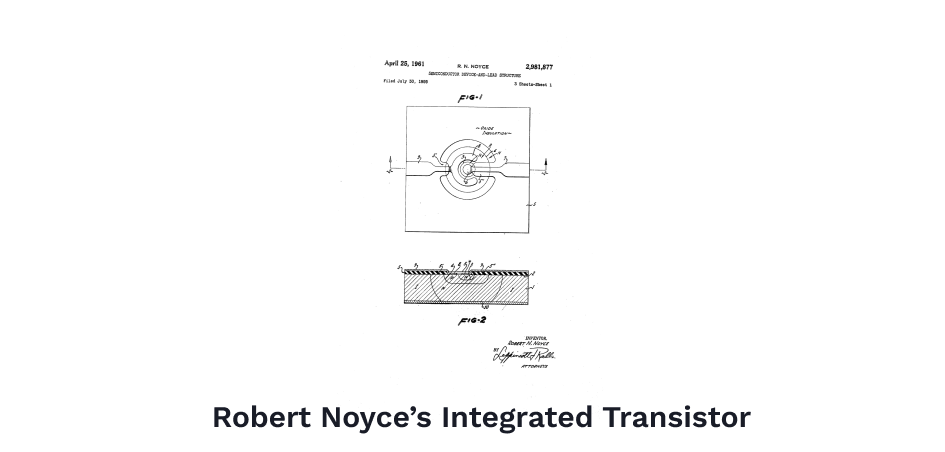

- Rather than spend hours hunched over a soldering iron, Robert Noyce figured it might be a better idea to etch the complete circuit, transistors, wires and all, onto one solid wafer of semiconductor material. The integrated circuit was born. "I was lazy," said Noyce, reflecting on his bout of genius, "it just didn't make sense having people soldering together these individual components." ([View Highlight](https://read.readwise.io/read/01h37m7xxzxb8xds72f4zemhmg))

- In perhaps the greatest example of economies of scale in history, the tinier transistors got, the more computation you could do for less power. The economics of miniaturization were so good they were almost unbelievable. By halving a transistor's dimensions, you scaled up the computational power by a factor of eight! ([View Highlight](https://read.readwise.io/read/01h37mc3tjnw9nax3tjw9qb9re))

- Intel's first product was the 1103 — a semiconductor-based memory chip. Transistors would open and close to either store or release an electric charge, thereby storing or releasing information. ([View Highlight](https://read.readwise.io/read/01h37mdzzwfxx65ht82tav5fd5))

- The Altair 8800, released in 1975, was the first attempt at a 'personal computer.' It was powered by the Intel 8080 chip, had only 256 bytes of memory, and could be programmed by flipping a bunch of mechanical switches. Remarkably, 25,000 Altair 8800s were sold for $1,000, just enough for the producers to break even. The Altair may not have had much staying power, but in the wake of its rollout, things moved fast.

  Later that year, Bill Gates and Paul Allen launched Microsoft, whose first product was an interpreter that helped users program their Altair 8800s. Two years later, in 1977, Steve Wozniak and Steve Jobs released the Apple II, a personal computer decked out with a monitor and the first commercially available graphical user interface, and powered by the MOS 6502 CPU, a chip rivaling Intel's. ([View Highlight](https://read.readwise.io/read/01h37mfvkf8ft1ch02ewj08agk))

- “The semiconductor guys don’t know much about computers, so they’ve copied a bunch of ancient architectures. So what we have in the personal computers is creaky old architectures with this wonderful [semiconductor] technology. So the people driving the technology don’t know anything about systems, and the people who have built traditional large computer systems haven’t participated in the [semiconductor] technology revolution,” he said. ([View Highlight](https://read.readwise.io/read/01h37mzt2yjq5ezje1geezhg79))

- Note: "He" is Carver Mead

- If you want to compute something really fast, you probably don't have time for the software you write to be compiled into x86 machine code and then executed. Instead, Carver Mead suggests that you map your program directly into the silicon. “The technology for doing that we understand pretty well. We’ve developed the technology for taking high-level descriptions and putting them on silicon. It’s called silicon compilation, which is the obvious next step. You need to compile things right down into the chip instead of compiling them into some code that you feed to this giant mass of chips that grinds away on it trying to interpret it,” said Mead.

  Producing application-specific integrated circuits, or ASICs for short, is exactly what happened. The most widely recognized variety of ASIC is probably the Bitcoin miner: a chip whose circuits are designed specifically to run the SHA-256 hash function (which Bitcoin applies twice) with extreme efficiency, and thereby mine Bitcoin. ([View Highlight](https://read.readwise.io/read/01h37n18ehnkz8vzs0eaadzrpw))

- Designing microelectronics will have to become synonymous with computer science, and the gap in understanding between hardware and software will need to close. As the great computer scientist Alan Kay once said, "people who are really serious about software should make their own hardware." ([View Highlight](https://read.readwise.io/read/01h37n5fd56h0vs4qhxb67v2qt))
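The "factor of eight" in the miniaturization highlight can be reproduced with classic constant-field (Dennard) scaling. What follows is an illustrative model, not the article's own derivation. It assumes that every linear dimension and the supply voltage shrink by the same factor `k`, and the function name `dennard_scaling` is hypothetical. Halving dimensions (`k = 2`) packs four times as many transistors into the same area, and each one switches about twice as fast. That gives eight times the switching events per unit area, while each switching event costs roughly an eighth of the energy.

```python
def dennard_scaling(k: float) -> dict:
    """Relative change in key quantities when dimensions and voltage shrink by k.

    Assumes idealized constant-field scaling: length, width, oxide thickness
    and supply voltage all scale as 1/k. Illustrative model only.
    """
    density = k ** 2                      # both x and y shrink, so k^2 more transistors per area
    frequency = k                         # shorter channels switch roughly k times faster
    capacitance = 1 / k                   # gate area / oxide thickness = (1/k^2) / (1/k)
    voltage = 1 / k
    energy_per_operation = capacitance * voltage ** 2          # C * V^2 per switching event
    power_per_transistor = energy_per_operation * frequency    # C * V^2 * f
    return {
        "density": density,
        "frequency": frequency,
        "energy_per_operation": energy_per_operation,
        "power_per_transistor": power_per_transistor,
        "power_density": density * power_per_transistor,       # stays constant under this model
        "throughput_per_area": density * frequency,            # switching events per second per area
    }

for name, factor in dennard_scaling(2).items():
    print(f"{name}: x{factor:g}")
```

Under these assumptions, power per unit area stays flat while throughput per unit area grows by `k**3`. That is one way to read the highlight's "more computation for less power."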
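The 1103 highlight describes transistors that store or release charge. A deliberately simplified model of one dynamic memory cell makes this concrete: a transistor acts as a switch that lets charge onto or off a tiny capacitor, and a sense step reads the bit back. The class `DramCell`, its leak rate, and its threshold are hypothetical illustration values, not parameters of the 1103's actual circuit. Because the isolated charge leaks away, dynamic memory such as the 1103 has to be refreshed periodically, which the `refresh` method stands in for.

```python
from dataclasses import dataclass


@dataclass
class DramCell:
    """Toy dynamic memory cell: a capacitor whose charge is gated by a transistor."""
    charge: float = 0.0          # normalized stored charge, 1.0 = fully charged
    leak_per_tick: float = 0.1   # hypothetical fraction of charge lost per time step
    threshold: float = 0.5       # hypothetical sensing threshold for reading a 1

    def write(self, bit: int) -> None:
        # Transistor switched on: the capacitor is charged (1) or drained (0).
        self.charge = 1.0 if bit else 0.0

    def read(self) -> int:
        # Sense the stored charge and compare it with the threshold.
        return 1 if self.charge > self.threshold else 0

    def tick(self) -> None:
        # Transistor switched off: the isolated charge slowly leaks away.
        self.charge *= 1.0 - self.leak_per_tick

    def refresh(self) -> None:
        # Read the bit and write it back at full strength before it fades.
        self.write(self.read())


# Without refresh, a stored 1 eventually reads back as 0.
cell = DramCell()
cell.write(1)
for _ in range(7):
    cell.tick()
print(cell.read())  # 0: the charge is now about 0.48, below the 0.5 threshold

# Refreshing every 5 steps keeps the bit intact in this toy model.
refreshed = DramCell()
refreshed.write(1)
for step in range(1, 21):
    refreshed.tick()
    if step % 5 == 0:
        refreshed.refresh()
print(refreshed.read())  # 1
```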
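The Bitcoin-miner highlight describes chips built to run SHA-256 as fast as possible. Bitcoin's proof of work hashes an 80-byte block header with SHA-256 twice and accepts the result only if it falls at or below a target. Mining is therefore a search loop over the header's 4-byte nonce field. This sketch assumes a placeholder header prefix of zero bytes and an artificially easy target so it finishes quickly. The function names `double_sha256` and `mine` are hypothetical.

```python
import hashlib
import struct
from typing import Optional, Tuple


def double_sha256(data: bytes) -> bytes:
    """Bitcoin-style proof-of-work hash: SHA-256 applied twice."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def mine(header_prefix: bytes, target: int, max_nonce: int = 2**32) -> Optional[Tuple[int, bytes]]:
    """Search for a nonce whose double-SHA-256 header hash is at or below target.

    header_prefix is the first 76 bytes of a block header (everything except
    the nonce). The hash is read as a little-endian integer, as Bitcoin does.
    """
    for nonce in range(max_nonce):
        header = header_prefix + struct.pack("<I", nonce)  # append 4-byte little-endian nonce
        digest = double_sha256(header)
        if int.from_bytes(digest, "little") <= target:
            return nonce, digest
    return None


# Hypothetical toy inputs: an all-zero 76-byte header prefix and a target that
# only requires the most significant byte of the hash to be zero (~1 in 256 tries).
TOY_PREFIX = bytes(76)
TOY_TARGET = 2**248 - 1

result = mine(TOY_PREFIX, TOY_TARGET)
if result is not None:
    nonce, digest = result
    print(nonce, digest[::-1].hex())  # Bitcoin conventionally displays hashes byte-reversed
```

Real difficulty targets demand vastly more attempts than this toy target. A mining ASIC roughly corresponds to hard-wiring the loop body (the double SHA-256 and the comparison) into silicon and running many copies in parallel, which is the kind of compile-to-silicon specialization Mead describes.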